dm = LibriSpeechDataModule(
    target_dir="../data/en",
    dataset_parts="mini_librispeech",
    output_dir="../data/en/LibriSpeech",
    num_jobs=1,
)

Speech to Text Datasets

Speech to text datasets

Base class

LibriSpeech DataModule

Usage

# skip this at export time to not waste time
# download
dm.prepare_data()

# libri = prepare_librispeech("../data/en/LibriSpeech", dataset_parts='mini_librispeech')

#! rm ../data/en/LibriSpeech/*.gz

dm.setup(stage='test')
dm.cuts_test

---------------------------------------------------------------------------
KeyError                                  Traceback (most recent call last)
Cell In[16], line 2
      1 #| notest
----> 2 dm.setup(stage='test')
      3 dm.cuts_test

Cell In[3], line 25, in LibriSpeechDataModule.setup(self, stage)
     23     self.tokenizer.inverse(*self.tokenizer(self.cuts_test.subset(first=2)))
     24 if stage == "test":
---> 25     self.cuts_test = CutSet.from_manifests(**self.libri["dev-clean-2"])
     26     self.tokenizer = TokenCollater(self.cuts_test)
     27     self.tokenizer(self.cuts_test.subset(first=2))

KeyError: 'dev-clean-2'
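The traceback above indicates that the manifest dictionary held in `self.libri` had no `"dev-clean-2"` entry when `setup` ran. One way to make such a failure easier to diagnose is a lookup that reports which splits are actually present. The following is only a sketch under the assumption that the manifests are a plain dictionary keyed by split name; `get_split` and `example_manifests` are hypothetical names used for illustration and are not part of the notebook or of Lhotse.

```python
def get_split(manifests, name):
    """Return the manifests for one split, or fail listing the available splits."""
    try:
        return manifests[name]
    except KeyError:
        available = ", ".join(sorted(manifests)) or "<none>"
        raise KeyError(
            f"split {name!r} not found; available splits: {available}"
        ) from None


# Hypothetical stand-in for the dictionary stored on the data module.
example_manifests = {
    "train-clean-5": {
        "recordings": "recordings manifest",
        "supervisions": "supervisions manifest",
    },
}

try:
    get_split(example_manifests, "dev-clean-2")
except KeyError as err:
    message = err.args[0]
    print(message)
```

Inside `setup`, the same idea would replace the direct `self.libri["dev-clean-2"]` indexing, so that a missing split names the keys that were found instead of only the one that was not.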

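recs = RecordingSet.from_file("../data/en/LibriSpeech/librispeech_recordings_dev-clean-2.jsonl.gz")
# Note: SupervisionSet(...) receives the path string itself here, so len(sup) below is
# the length of that string (68 characters), not the number of supervisions.
# SupervisionSet.from_file(...) would load the manifest instead.
sup = SupervisionSet("../data/en/LibriSpeech/librispeech_supervisions_dev-clean-2.jsonl.gz")
print(len(recs), len(sup))

1089 68

test_dl = dm.test_dataloader()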

b = next(iter(test_dl))

print(b["feats_pad"].shape, b["tokens_pad"].shape, b["ilens"].shape)

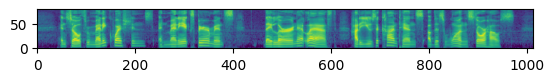

plt.imshow(b["feats_pad"][0].transpose(0,1), origin='lower')

# dm.tokenizer.idx2token(b["tokens_pad"][0])

# dm.tokenizer.inverse(b["tokens_pad"][0], b["ilens"][0])torch.Size([39, 1014, 80]) torch.Size([39, 178]) torch.Size([39])
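The three shapes printed above presumably read as a batch of 39 utterances padded to 1014 frames of 80 features each, token sequences padded to length 178, and one length value per utterance. A rough pure-Python sketch of that padding scheme is shown below. `pad_batch` is a hypothetical helper, not the collation code used by the data module, and the toy sizes are made up for illustration.

```python
def pad_batch(sequences, pad_value=0.0):
    """Pad variable-length sequences of feature frames to a common length.

    Returns (padded, lengths), where padded is a nested list of shape
    [batch, max_len, feat_dim] and lengths holds the unpadded lengths.
    """
    lengths = [len(seq) for seq in sequences]
    max_len = max(lengths)
    feat_dim = len(sequences[0][0])
    padded = [
        [list(frame) for frame in seq]
        + [[pad_value] * feat_dim for _ in range(max_len - len(seq))]
        for seq in sequences
    ]
    return padded, lengths


# Toy batch: three utterances with 2, 4 and 3 frames of 5 features each.
batch = [[[1.0] * 5 for _ in range(n)] for n in (2, 4, 3)]
feats_pad, ilens = pad_batch(batch)
shape = (len(feats_pad), len(feats_pad[0]), len(feats_pad[0][0]))
print(shape, ilens)  # (3, 4, 5) [2, 4, 3]
```

Keeping the original lengths alongside the padded batch is what lets downstream code ignore the padded frames.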

print(dm.cuts_test)

cut = dm.cuts_test[0]

# pprint(cut.to_dict())

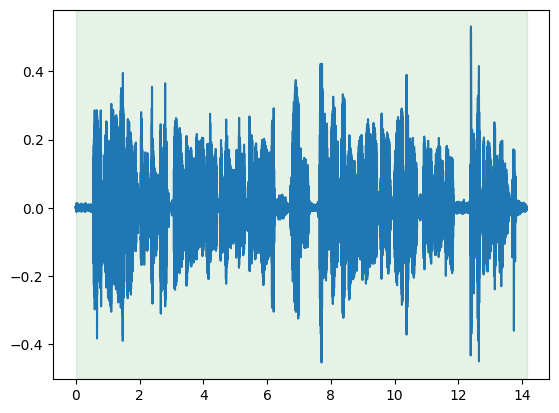

cut.plot_audio()CutSet(len=1089) [underlying data type: <class 'list'>]